In today's fast-paced business environment, leveraging Framesoft Artificial Intelligence (FAI) in contract management offers transformative benefits that can significantly enhance operational efficiency, reduce risks, and unlock strategic insights. FAI revolutionizes the way organizations manage contracts, from analysis to compliance, providing a competitive edge that is indispensable for businesses aiming to thrive.

Key Benefits

1. Operational Efficiency and Cost Savings

Framesoft Artificial Intelligence (FAI) automates the tedious and time-consuming tasks of contract review, data extraction and reconciliation. By significantly reducing the manual effort involved in these processes, businesses can allocate their resources more effectively, focusing on strategic initiatives rather than administrative tasks. This operational efficiency translates into substantial cost & time savings.

2. Enhanced Accuracy and Reduced Risks

The precision of FAI in analyzing complex legal documents minimizes the risk of human error, ensuring that key terms, obligations, and compliance requirements are accurately identified.

3. Improved Contract Visibility and Compliance

FAI provides unparalleled visibility into contract portfolios, enabling businesses to easily track and manage their contractual obligations, rights, and risks. This enhanced oversight helps ensure that contracts remain consistent with the data items stored in the Framesoft Contract Repository (FCR).

4. Competitive Advantage and Strategic Insights

By harnessing FAI, businesses can unlock valuable insights into contract performance, relationships, and market opportunities. This data-driven approach facilitates more informed strategic decisions, helping to identify trends, optimize contract terms, and negotiate from a position of strength. The competitive advantage gained through Framesoft Artificial Intelligence (FAI) is a game-changer, enabling businesses to stay ahead.

5. Seamless Integration and Scalability

FAI is integrated into FCR and its workflows, ensuring a seamless transition and immediate efficiency gains. FAI scales to handle an increasing volume of contracts with consistent accuracy, supporting business growth.

How Framesoft Artificial Intelligence (FAI) supports Contract Management

1. Document Digitalization & Text Extraction

AI-driven Optical Character Recognition (OCR) technology is used to digitize physical documents or parse digital formats. Preprocessing techniques improve the quality of text extraction and handle issues such as skewed pages, different fonts, and varying layouts.

2. Understanding Contracts

FAI analyzes the semantics of the text to understand the context and meaning of clauses within agreements. This involves parsing legal language and identifying definitions, obligations, rights, and conditions. FAI identifies and categorizes key entities in the text, such as the parties involved, financial terms, dates, and jurisdictional information.

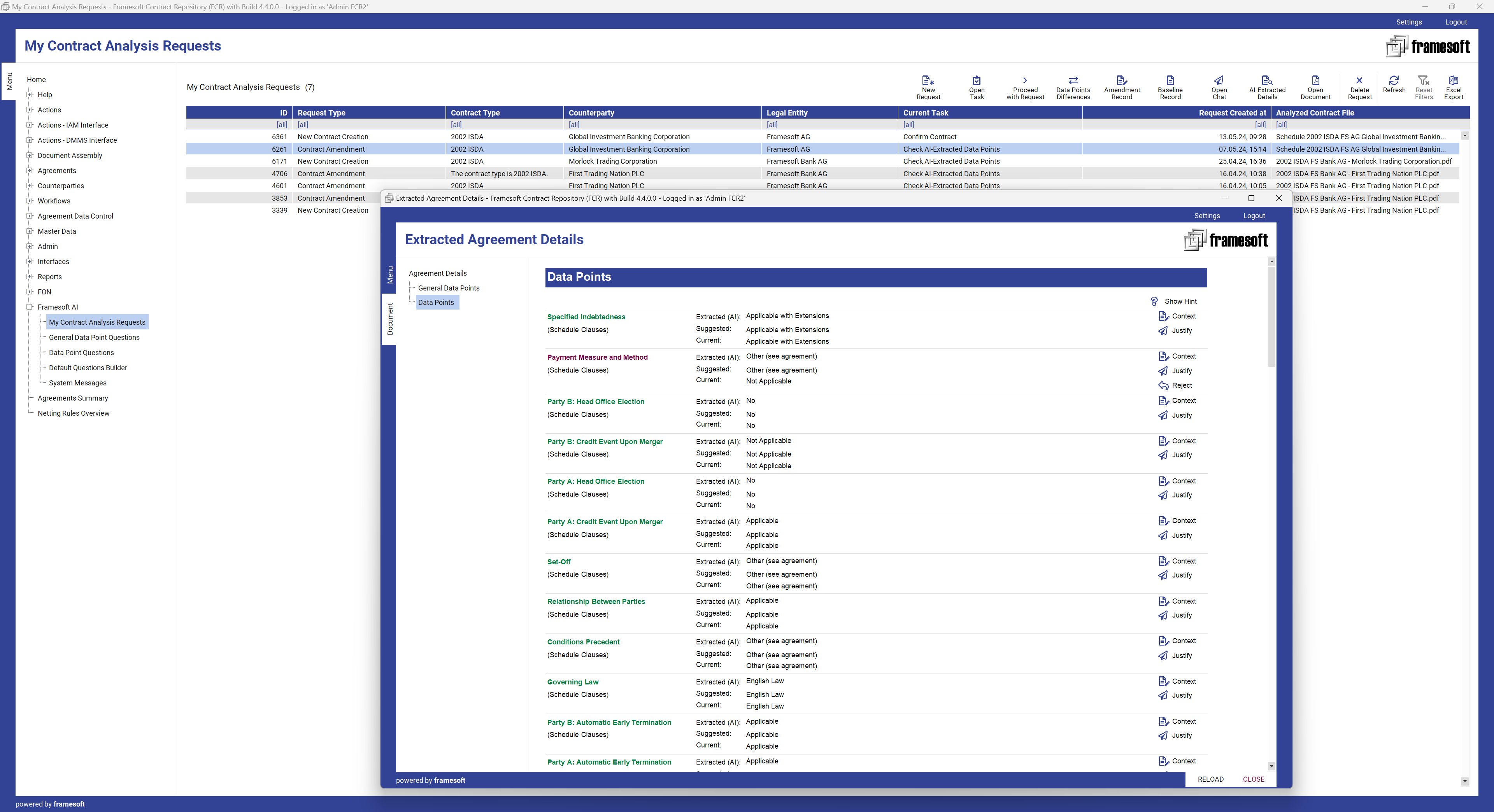

3. Data Extraction

FAI is trained on legal documents to extract essential data items from the agreement types stored in FCR, such as termination events, collateral arrangements, and netting provisions. Extracted data is normalized to ensure consistency across documents. This step is vital for accurate comparison and reconciliation with existing data items in Framesoft Contract Repository (FCR).
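To illustrate why normalization matters for comparison, the following minimal Python sketch maps differently formatted dates, amounts, and terms onto one canonical form. The date layouts, the amount pattern, and the function names are assumptions chosen for illustration; they do not describe how FAI or FCR normalizes data internally.

```python
import re
from datetime import date, datetime
from decimal import Decimal

# Hypothetical date layouts an extraction step might return (assumption).
DATE_FORMATS = ("%Y-%m-%d", "%d.%m.%Y", "%d %B %Y", "%B %d, %Y")


def normalize_date(raw: str) -> date:
    """Parse a date written in any of the layouts listed in DATE_FORMATS."""
    text = raw.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {raw!r}")


def normalize_amount(raw: str) -> tuple[str, Decimal]:
    """Split text such as 'USD 1,000,000.00' into (currency, amount)."""
    match = re.fullmatch(r"\s*([A-Za-z]{3})\s*([\d,]+(?:\.\d+)?)\s*", raw)
    if match is None:
        raise ValueError(f"unrecognized amount: {raw!r}")
    currency, digits = match.groups()
    return currency.upper(), Decimal(digits.replace(",", ""))


def normalize_text(raw: str) -> str:
    """Collapse whitespace and letter case so equal terms compare equal."""
    return " ".join(raw.split()).casefold()
```

With values in one canonical form, "01.03.2024" and "March 1, 2024" compare as the same date, so a later reconciliation step reports only genuine differences.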

4. Agreement Type & Agreement Identification

Based on the extracted data, FAI checks whether the agreement already exists in FCR:

- If the agreement exists, the agreement data items stored in FCR will be compared with the clauses extracted from the document by FAI.

- If the agreement does not exist, a new agreement is created automatically.

FAI automatically detects and flags anomalies or differences between the contract document data items and FCR repository data items, prioritizing them based on predefined rules or their potential impact.
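The identification logic above can be sketched in a few lines of Python. This is an illustrative sketch only: the in-memory dictionary standing in for the repository, the lookup key, and the priority rules are all hypothetical and are not taken from FCR.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical priority rules (assumption): lower number = reviewed first.
PRIORITY = {
    "termination_events": 1,
    "netting_provisions": 1,
    "collateral_arrangements": 2,
}
DEFAULT_PRIORITY = 3


@dataclass
class Difference:
    item: str
    document_value: str
    repository_value: Optional[str]
    priority: int


def identify_and_compare(repository: dict, key: tuple, extracted: dict):
    """Create the agreement if it is unknown; otherwise flag differences."""
    if key not in repository:
        # Agreement not found: store the extracted data items as a new entry.
        repository[key] = dict(extracted)
        return "created", []
    stored = repository[key]
    differences = [
        Difference(item, value, stored.get(item),
                   PRIORITY.get(item, DEFAULT_PRIORITY))
        for item, value in extracted.items()
        if stored.get(item) != value
    ]
    # Most important differences first, then alphabetically for stability.
    differences.sort(key=lambda d: (d.priority, d.item))
    return "compared", differences
```

A first call with an unknown key returns `"created"` with no differences; a later call for the same key with changed values returns `"compared"` and the flagged items in priority order, leaving the stored data untouched.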

5. Reconciliation with Framesoft Contract Repository (FCR)

Framesoft Artificial Intelligence (FAI) compares extracted data items from agreements with clauses in FCR. This involves matching contract terms, parties, and conditions to identify discrepancies or inconsistencies. The results will be presented in FCR Compare screens and can be applied or rejected.
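The apply-or-reject step can be pictured as follows. This is a minimal sketch under stated assumptions: the function name `resolve`, the decision labels `"apply"` and `"reject"`, and the dictionary layout are hypothetical and do not reflect the actual FCR Compare screens.

```python
def resolve(stored: dict, proposed: dict, decisions: dict) -> dict:
    """Return a copy of the stored data items with accepted changes applied.

    stored:    data items currently held for the agreement
    proposed:  item -> value extracted from the contract document
    decisions: item -> "apply" or "reject" (hypothetical labels);
               items without a decision are left unchanged
    """
    updated = dict(stored)
    for item, new_value in proposed.items():
        if decisions.get(item) == "apply":
            updated[item] = new_value
    return updated
```

Returning a new dictionary rather than changing `stored` in place keeps the original values available, which makes it straightforward to show both versions side by side or to discard the result.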

6. Generative AI & Large Language Model

FAI is based on generative AI and a large language model (LLM) and is adapted to contract analysis via prompt engineering. As a result, FAI does not require any customer-specific training on legal documents, and no client data is used for AI training purposes.
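As a rough illustration of what prompt engineering means in practice, the sketch below builds an extraction prompt and validates a JSON reply, without any model training. The field names, the prompt wording, and both function names are assumptions made for this example; the actual prompts used by FAI are not described in this document, and no model is called here.

```python
import json

# Hypothetical data items to request (assumption, not the FCR data model).
FIELDS = ["parties", "governing_law", "termination_events"]


def build_prompt(contract_text: str, fields: list = FIELDS) -> str:
    """Compose an instruction that asks for the given items as JSON."""
    field_list = "\n".join(f"- {name}" for name in fields)
    return (
        "You are a contract analyst. Extract the following data items from "
        "the agreement below and answer with a single JSON object that uses "
        "exactly these keys. Use null for items that do not appear.\n"
        f"{field_list}\n\n"
        f"Agreement:\n{contract_text}"
    )


def parse_reply(reply: str, fields: list = FIELDS) -> dict:
    """Check that a model reply is a JSON object containing every key."""
    data = json.loads(reply)
    if not isinstance(data, dict):
        raise ValueError("reply is not a JSON object")
    missing = [name for name in fields if name not in data]
    if missing:
        raise ValueError(f"reply is missing keys: {missing}")
    # Keep only the requested keys and ignore anything extra.
    return {name: data[name] for name in fields}
```

Because the behavior is steered by the instruction text alone, changing the requested data items means editing the field list rather than retraining a model.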

7. Workflow Automation

Automated workflows can be established where FAI triggers actions based on the analysis outcomes, such as updating records, generating compliance reports, or initiating review processes for anomalies.
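One simple way to picture such outcome-driven workflows is a table that maps each analysis outcome to the actions it triggers. The outcome names and action functions below are hypothetical placeholders; the sketch does not describe the FCR workflow engine.

```python
from typing import Callable


# Hypothetical actions (assumption); each returns a short log message.
def update_record(agreement_id: str) -> str:
    return f"record updated for {agreement_id}"


def generate_compliance_report(agreement_id: str) -> str:
    return f"compliance report generated for {agreement_id}"


def start_review(agreement_id: str) -> str:
    return f"review started for {agreement_id}"


# Hypothetical outcome names mapped to the actions they trigger, in order.
ACTIONS: dict[str, list[Callable[[str], str]]] = {
    "match": [update_record],
    "anomaly": [start_review, generate_compliance_report],
}


def run_workflow(outcome: str, agreement_id: str) -> list[str]:
    """Run every action registered for the given analysis outcome."""
    handlers = ACTIONS.get(outcome)
    if handlers is None:
        raise ValueError(f"no workflow defined for outcome {outcome!r}")
    return [handler(agreement_id) for handler in handlers]
```

Keeping the mapping in one table means a new outcome or action can be added without touching the dispatch logic.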

Transforming Contract Management with AI-Powered Efficiency

Framesoft Artificial Intelligence (FAI) is more than just a tool; it is a transformative solution designed for any organization that deals with contracts regularly. FAI offers significant advantages, making contract management more efficient, secure, and strategic.

Embrace the Future of Contract Management with Framesoft Artificial Intelligence (FAI)

For more information about Framesoft Artificial Intelligence (FAI) or to request a demo, please contact us via e-mail.